Here are some infrastructure diagrams for our major systems. They're simplified for brevity and because it was super inconvenient to do any complicated layouts with the [diagrams](https://diagrams.mingrammer.com/) package, which made me slightly sad.

Backend

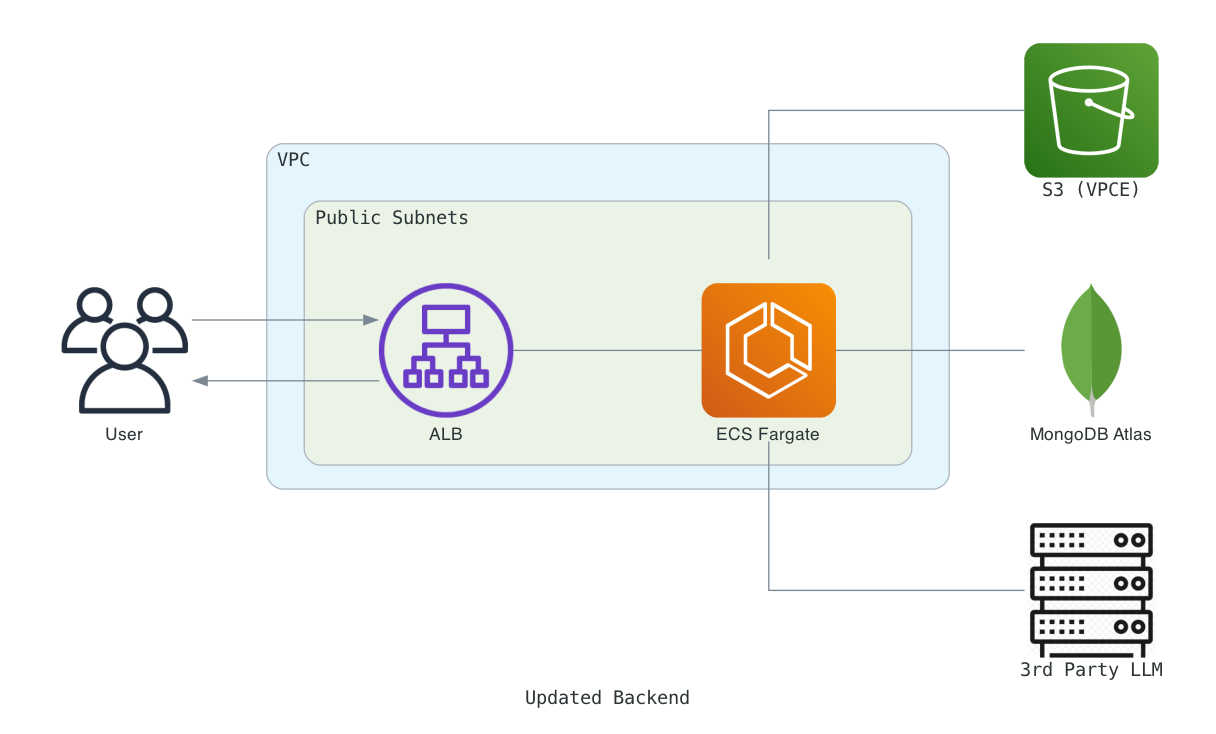

- For the backend, we use GitHub Actions to build Docker images, push them to ECR, and trigger a blue-green deployment on ECS Fargate using the new image
  - ECS Fargate deploys containers based on requested compute (vCPU, RAM), abstracting away the underlying instances
  - Fargate maintains our desired container count and autoscales when needed
- Most backend traffic stays on the AWS network via:
  - a VPC Endpoint to S3
  - VPC Peering to our MongoDB Atlas cluster
- We also send HTTPS traffic over the public internet to connect to 3rd-party LLM providers
  - We also played with hosting 70B models on EC2 g5 instances with HuggingFace TGI and vLLM
  - But the frontier lab APIs are so performant (model quality + throughput), cheap, and convenient that we tend to use those
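
To make the three network paths above concrete, here's a minimal sketch of how a route table could pick between them using longest-prefix matching. Every CIDR and target name below is a hypothetical placeholder (documentation ranges stand in for S3 and the LLM provider), not a value from our actual VPC.

```python
import ipaddress

# Hypothetical route table: (destination CIDR, target).
# None of these values come from the real environment.
ROUTES = [
    ("10.0.0.0/16", "local"),              # traffic inside the VPC
    ("192.168.248.0/21", "vpc-peering"),   # assumed MongoDB Atlas CIDR
    ("203.0.113.0/24", "s3-vpc-endpoint"), # stand-in for the S3 prefix list
    ("0.0.0.0/0", "internet"),             # everything else, e.g. LLM APIs
]


def pick_route(destination_ip: str) -> str:
    """Return the target of the most specific route containing the IP."""
    ip = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in ROUTES
        if ip in ipaddress.ip_network(cidr)
    ]
    # The default route always matches, so `matches` is never empty.
    _, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


for ip in ["10.0.1.5", "192.168.250.10", "203.0.113.9", "198.51.100.7"]:
    print(ip, "->", pick_route(ip))
```

Only the last address falls through to the default route, which mirrors the idea that just the 3rd-party LLM calls leave the AWS network.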

Code

```{python}
from diagrams import Cluster, Diagram, Edge
from diagrams.aws.compute import ECS
from diagrams.aws.network import ALB
from diagrams.aws.storage import S3
from diagrams.generic.compute import Rack
from diagrams.onprem.client import Users
from diagrams.onprem.database import MongoDB

# Attributes for styling
attr = {
    "fontsize": "14",
    "fontname": "Monospace",
}

c_attr = {
    "margin": "18",
    "fontsize": "14",
    "fontname": "Monospace",
}

# Diagram construction
with Diagram(
    "Updated Backend",
    show=False,
    filename="backend",
    graph_attr={
        "ratio": "0.5625",
        "pad": "0.2",
        **attr,
    },
):
    # Users
    users = Users("User")

    # Outside VPC
    s3 = S3("S3 (VPCE)", **attr)
    mongo_db = MongoDB("MongoDB Atlas")
    third_party_llm = Rack("3rd Party LLM", **attr)

    with Cluster("VPC", graph_attr=c_attr):
        with Cluster("Public Subnets", graph_attr=c_attr):
            alb = ALB("ALB")
            ecs_fargate = ECS("ECS Fargate")

        # Removing just cos the diagrams package is such a pain to use for laying out diagrams...
        # with Cluster("Private Subnets", graph_attr=c_attr):
        #     self_hosted_llm = Rack("Self-hosted LLM (Q5)")

    # Connections based on the sketch
    alb - ecs_fargate
    # ecs_fargate - self_hosted_llm
    ecs_fargate - s3
    ecs_fargate - mongo_db
    ecs_fargate - third_party_llm
    users << alb
    users >> alb
```

Frontend

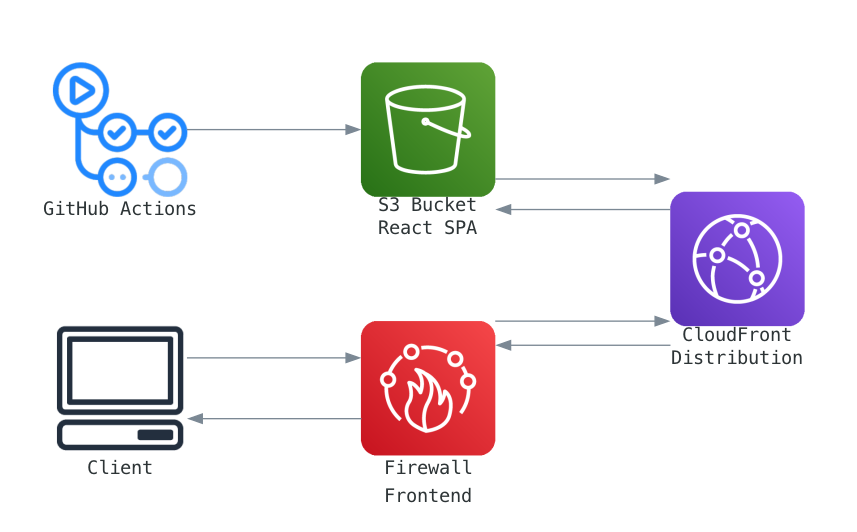

The frontend infra is minimal - we use GitHub Actions to build a React SPA bundle, push it to S3, and invalidate the CloudFront Distribution cache
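
As a rough sketch, the three deploy steps above could map to commands like the ones below. The bucket name, distribution ID, `build` output directory, and the `npm run build` script are all assumptions for illustration; the actual workflow file isn't shown in this post.

```python
import shlex
from typing import List


def frontend_deploy_commands(
    bucket: str,
    distribution_id: str,
    build_dir: str = "build",  # assumed bundler output directory
) -> List[List[str]]:
    """Return the build, upload, and cache-invalidation commands in order."""
    return [
        # 1. Build the React SPA bundle (assumes an npm "build" script).
        ["npm", "run", "build"],
        # 2. Push the bundle to the S3 bucket behind CloudFront.
        ["aws", "s3", "sync", build_dir, f"s3://{bucket}"],
        # 3. Invalidate every cached path so clients get the new bundle.
        [
            "aws", "cloudfront", "create-invalidation",
            "--distribution-id", distribution_id,
            "--paths", "/*",
        ],
    ]


# Hypothetical identifiers, printed as shell-ready command lines.
for command in frontend_deploy_commands("example-spa-bucket", "EXAMPLEDISTID"):
    print(shlex.join(command))
```

Returning argument lists rather than one shell string keeps the quoting explicit, which matters for the `/*` path.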

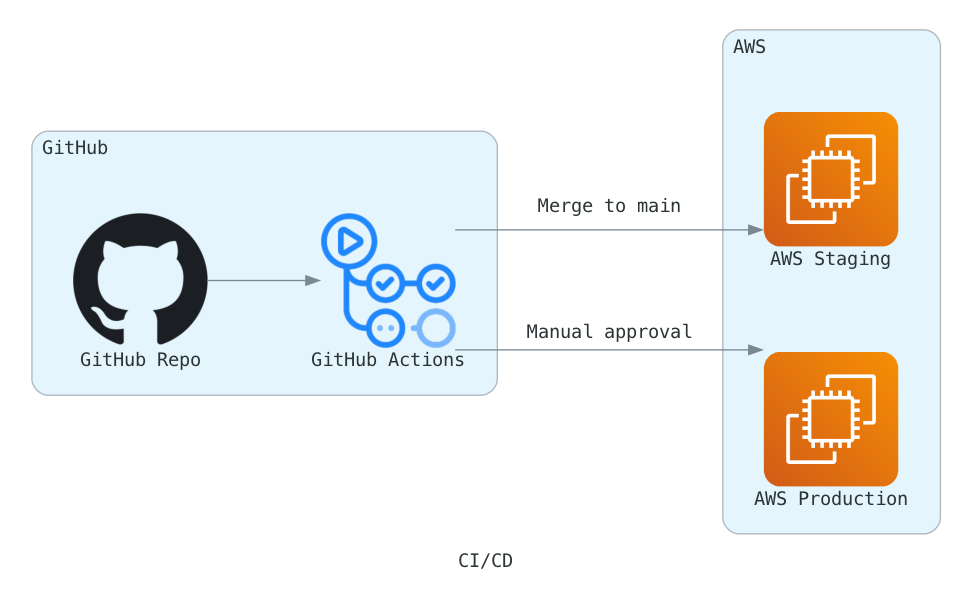

- We do all our linting, building, and unit testing in GitHub Actions (GHA), then push immutable artifacts (e.g. bundle for frontend, image for backend) to our staging environment
  - This has been my favorite CI/CD setup. Before, I mostly used CodePipeline, but making the switch to GHA has been great!
- We test the new build in staging then click a button to update prod
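
The promotion flow above can be modeled in a few lines. This is only a sketch of the idea (the same immutable artifact moves from staging to prod after a manual approval); the class and method names are hypothetical and don't correspond to anything in our real workflows.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)  # frozen: an artifact never changes after it's built
class Artifact:
    kind: str     # e.g. "frontend-bundle" or "backend-image"
    version: str  # e.g. a git SHA or image digest


class Pipeline:
    """Tracks which artifact is deployed to each environment."""

    def __init__(self) -> None:
        self.deployed: Dict[str, Optional[Artifact]] = {
            "staging": None,
            "production": None,
        }

    def on_merge_to_main(self, artifact: Artifact) -> None:
        # A merge to main ships the freshly built artifact to staging.
        self.deployed["staging"] = artifact

    def promote(self, approved: bool) -> bool:
        # Production only ever receives the exact artifact tested in staging,
        # and only after someone approves it (the "click a button" step).
        candidate = self.deployed["staging"]
        if not approved or candidate is None:
            return False
        self.deployed["production"] = candidate
        return True


pipeline = Pipeline()
pipeline.on_merge_to_main(Artifact("backend-image", "abc123"))
pipeline.promote(approved=True)
print(pipeline.deployed)
```

Because nothing is rebuilt between environments, what runs in prod is byte-for-byte what was tested in staging.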

---
title: "(3 of 6) CHRT.com - Infra Diagrams"
author: "Aaron Carver"
description: "Autopilot for Analytics"
date: "Nov 10 2024"
date-format: "MMMM YYYY"
# image: "img/image.jpg"
# image-alt: a great image
categories: [Projects, Full Stack, Code, CHRT]
lightbox: true
format:
  html:
    code-fold: true
    code-tools:
      source: true
      toggle: false
      caption: none
---

## (Significantly Simplified) Infra Diagrams

### Frontend

```{python}
from diagrams import Diagram
from diagrams.aws.network import CloudFront
from diagrams.aws.security import FirewallManager
from diagrams.aws.storage import S3
from diagrams.onprem.ci import GithubActions
from diagrams.onprem.client import Client

attr = {
    "fontsize": "14",
    "fontname": "Monospace",
}

with Diagram(
    "Frontend",
    show=False,
    filename="frontend",
    graph_attr={
        "ratio": "0.5625",  # 0.5625, compress
        "pad": "0.2",
        **attr
    },
):
    gha = GithubActions("GitHub Actions", **attr)
    s3 = S3("S3 Bucket\nReact SPA", **attr)
    cf = CloudFront("CloudFront\nDistribution", **attr)
    firewall = FirewallManager("Firewall", **attr)
    client = Client("Client", **attr)

    gha >> s3
    s3 << cf
    s3 >> cf
    client << firewall << cf
    client >> firewall >> cf
```

### CI/CD

```{python}
from diagrams import Cluster, Diagram, Edge
from diagrams.aws.compute import EC2
from diagrams.onprem.ci import GithubActions
from diagrams.onprem.vcs import Github

attr = {
    "fontsize": "14",
    "fontname": "Monospace",
}

c_attr = {
    "margin": "18",
    "fontsize": "14",
    "fontname": "Monospace",
}

with Diagram(
    "CI/CD",
    show=False,
    filename="cicd",
    graph_attr={
        "ratio": "0.5625",  # 0.5625, compress
        "pad": "0.2",
        **attr
    },
):
    with Cluster("GitHub", graph_attr={**c_attr}):
        repo = Github("GitHub Repo", **attr)
        actions = GithubActions("GitHub Actions", **attr)

    with Cluster("AWS", graph_attr={**c_attr}):
        staging = EC2("AWS Staging", **attr)
        production = EC2("AWS Production", **attr)

    repo >> actions
    actions >> Edge(label="Merge to main", **attr) >> staging
    actions >> Edge(label="Manual approval", **attr) >> production
```

### Less Simplified Diagram

- TODO - add whiteboard diagram

---

- Full Series: [CHRT - Autopilot for Analytics (6 posts)](/blog.html#category=CHRT)
- Previous: [(2 of 6) CHRT.com - Repos, Demos](/posts/2024/chrt/02_repos_demos.html)
- Next Post: [(4 of 6) CHRT.com - IaC via CloudFormation](/posts/2024/chrt/04_iac_cloudformation.html)