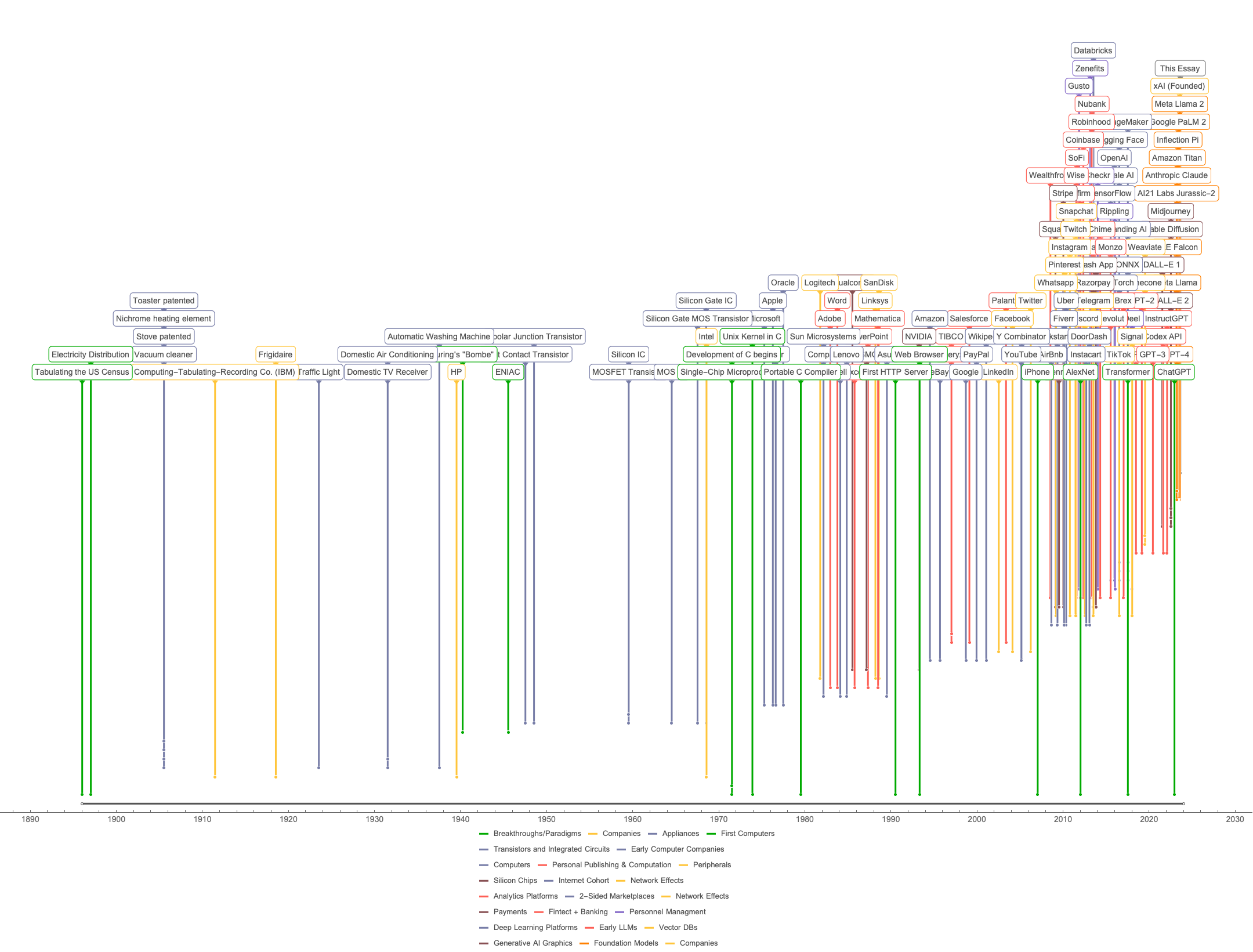

The distribution of electricity resulted in an endless evolution of electricity-powered appliances and applications. Since then, the arrival of a powerful new technology has repeatedly been followed by the creation of many successful products and companies. This cycle is often called the “electricity metaphor”.

In the 1970s, Personal Computing was the “new electricity”. In the 1990s, the Internet was the “new electricity”. And now, Artificial Intelligence is the “new electricity”.

Electricity - Energy over the wire.

Personal Computing - A bicycle for the mind.

The Internet - Information over the wire.

AI - Intelligence over the wire.

I’ve put together some lists of cohorts of products and companies built at the time that each “new electricity” emerged, including:

- Personal Computing Becomes Possible (1971-1979)

- Personal Computing Becomes Popular (1979-1993)

- Information Over the Wire: The Internet Begins (1993-2006)

- Information Over the Wire: The Internet Continues (2007-2018)

- Intelligence Over the Wire: AI Starts to Work (2012-2022)

- Intelligence Over the Wire: ChatGPT and Beyond (2022-?)

- p.s. Microprocessor and C to ChatGPT (1971-2022)

- p.p.s. Wait, what happened before the Microprocessor and C?

Last Movers

The lists that follow are far from exhaustive - they’re generally lists of the founding moments of companies, products, and technologies which are still in popular use today.

They’re just meant to illustrate the pattern that when a new technology emerges, many important products and companies are then built using it. In other words, these are lists of “last movers” not “first movers”. E.g. the lists highlight:

- the C programming language, but not the languages that predate it

- Google, but not the search engines that predate it

- AlexNet, but not the neural networks that predate it

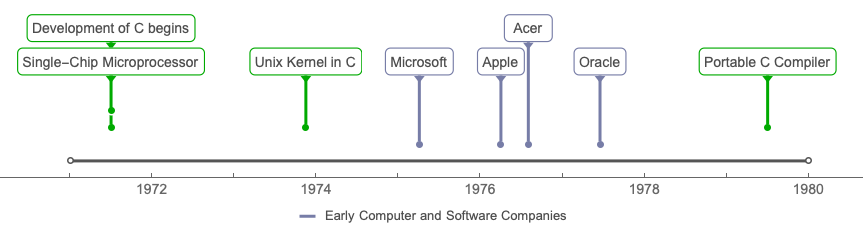

Personal Computing Becomes Possible (1971-1979)

We’ll start in 1971 when the first microprocessor was created. The era before microprocessors is covered in the postscripts.

Once the microprocessor was created, the personal computer became feasible. In the beginning, there were programming languages and kids in garages trying to make devices to use them. Among the most successful efforts were, of course, C, Microsoft, and Apple.

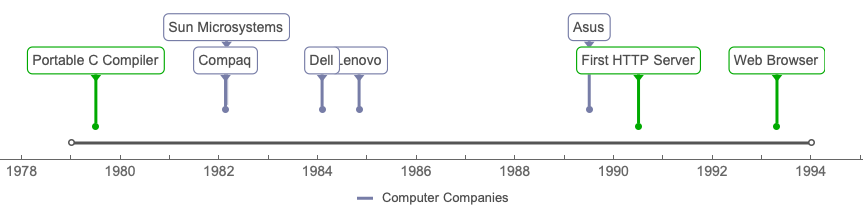

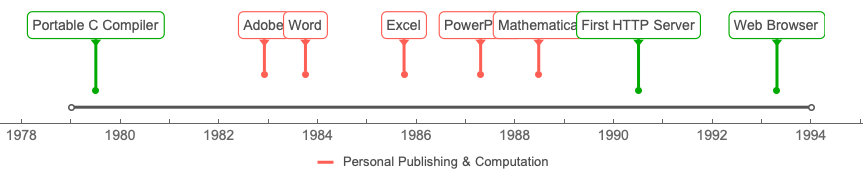

Personal Computing Becomes Popular (1979-1993)

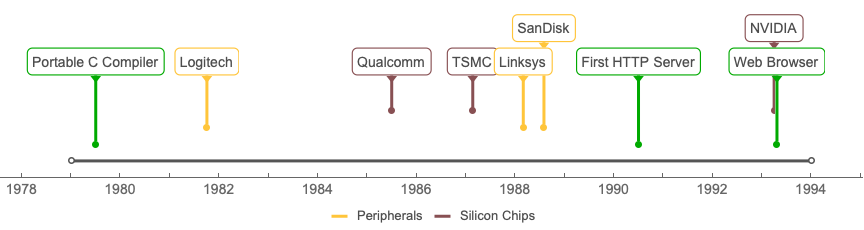

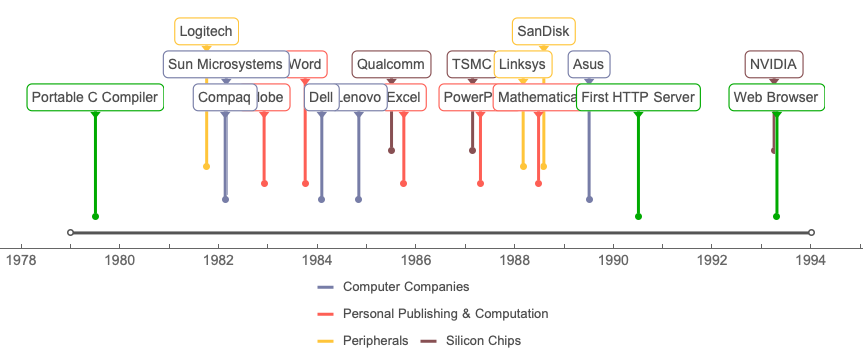

Computing devices started to spread. Between the creation of the portable C compiler and the first HTTP server, many still-powerful companies and products emerged in the areas of computers, personal publishing & computation, and peripherals & silicon chips:

All together:

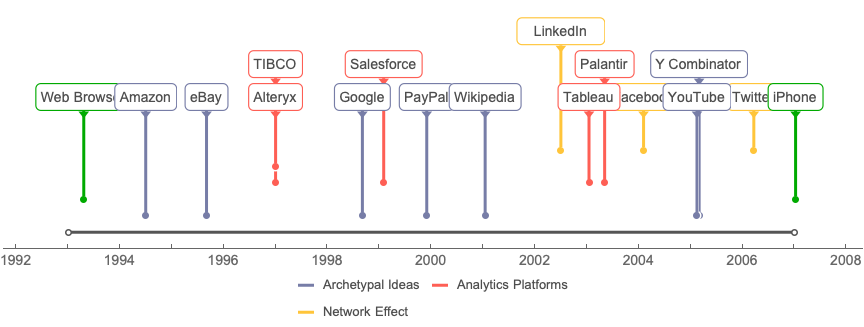

Information Over the Wire: The Internet Begins (1993-2006)

The opportunity of the internet was that a company could have millions (or billions!) of customers around the world. The challenge was handling all those interactions and the data produced by them. I’m simplifying a bit here, but there’s approximately one huge winner for each archetypal idea, which I’ve noted below.

These “winners” typically executed both what I’ll call the “easy parts” and the “hard parts” of their respective services. Usually, other companies in the same space could do the “easy part”, but failed to excel at the “hard part”. In lieu of providing an analogy, let’s just go through the list:

Archetypal Ideas

Amazon, 1994: people buying things from a company online

- easy part (selling): listing items online

- hard part (selling): understanding user behavior through massive data collection and processing

- easy part (shipping): putting things in boxes and sending them to people

- hard part (shipping): understanding operations through electronic tracking of inventory and fulfillment

eBay, 1995: people buying things from one another online

- easy part: holding an online auction

- hard part: showing users what they actually wanted to find within a long-tailed catalog

Google, 1998: people finding things online

- easy part: showing users suggestions for pages to visit

- hard part: knowing which pages are important

PayPal, 1998: people sending money online

- easy part: moving money between accounts

- hard part: knowing which transactions are real and which are fraudulent

Wikipedia, 2001: people curating knowledge online

- easy part: hosting openly-editable pages of information

- hard part: showing (almost) exclusively true information

YouTube, 2005: people publishing videos online

- easy part: hosting videos on servers

- hard part: identifying copyrighted content, gory and pornographic material, and not-safe-for-children material

Analytics Platforms

Alteryx and TIBCO, 1997 + Tableau, 2003 - Data analytics for businesses

- easy part: data analytics

- hard part: (a) upskilling workers to use software for managing data analytics automation and (b) handling the scattered data landscape common in enterprise environments

Salesforce, 1999 - CRM

- easy part: data analytics

- hard part: making enterprise software that’s actually easy to implement and convincing customers to move from CD-delivered software to SaaS

Palantir, 2003 - Data analytics for governments

- easy part: data analytics

- hard part: handling data provenance and fine-grained user permissions for highly-sensitive data

Network Effects

LinkedIn - 2002, Real identity online - professional

&

Facebook - 2004, Real identity online - personal

- easy part: get some people to use the site

- hard part: (a) definitively winning the “network effect” game by getting everyone to use the site, (b) handling the ensuing network traffic and data

Y Combinator - 2005, Starting Startups

- easy part: writing checks to founders

- hard part: (a) finding, selecting, and funding enough great founders, and (b) helping them succeed often enough to create a network which provides a disproportionate advantage for future founders to join

Twitter - 2006, “Town Square” online

- easy part: publish short messages

- hard part: maintain civility, free speech, and clarity of fact/fiction (they never did the hard part, but the new ownership has stated the intent to do the hard part now…)

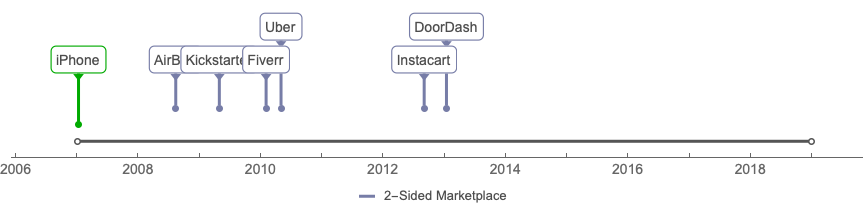

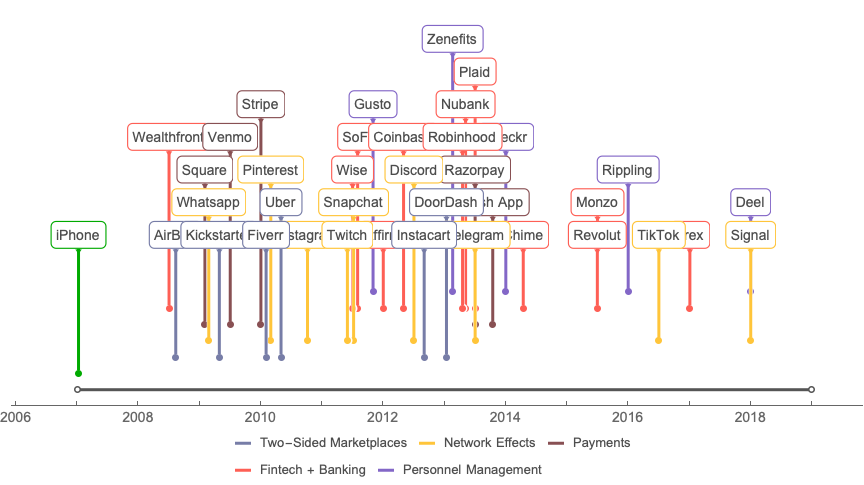

Information Over the Wire: The Internet Continues (2007-2018)

The number of users grew drastically as mobile phones allowed the internet to be used on the go, on the couch, etc. and as high-speed internet became ubiquitous.

Connecting People: Two-Sided Marketplaces and Network Effects

Marketplaces were established for staying in someone’s house (Airbnb), riding in someone’s car (Uber), getting tasks done (Fiverr), picking up groceries (Instacart), picking up takeout (DoorDash), etc.

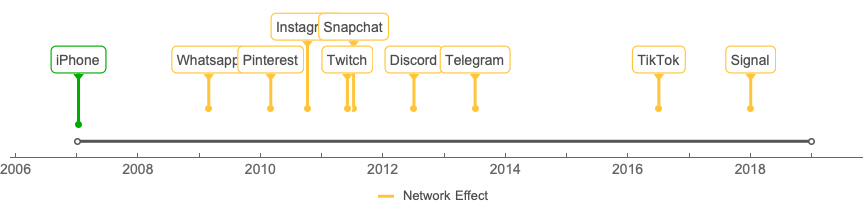

And networks were created to allow people to share messages between individuals and small groups (WhatsApp, Telegram, Signal), photos of cool stuff (Pinterest), one’s own photos and short videos (Instagram, TikTok), one’s own photos for a limited time (Snapchat), livestreams (Twitch), messages between large groups (Discord), etc.

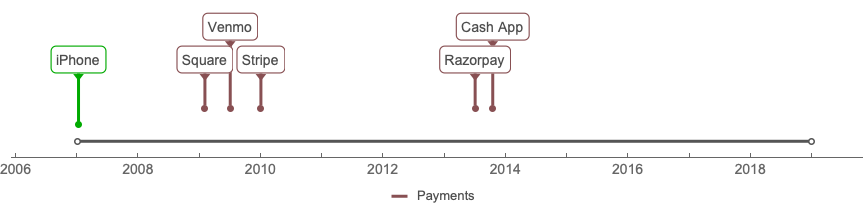

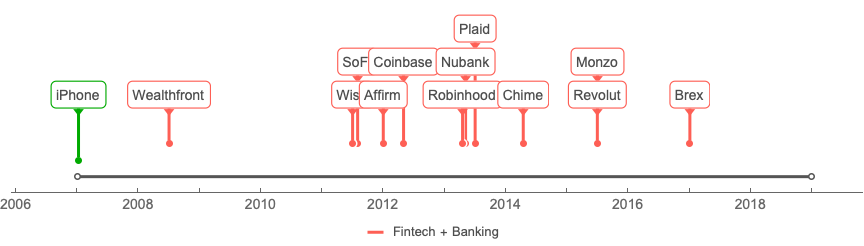

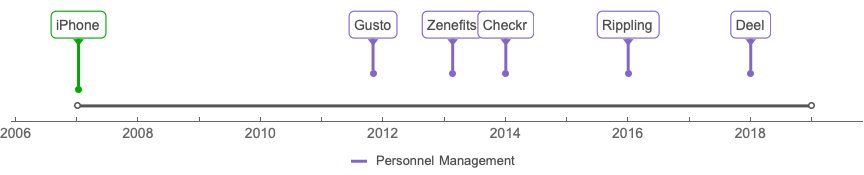

Finance: Payments, Fintech + Banking, Personnel Management

Financial services and the like moved online across categories like payments, fintech and banking, and personnel management.

All Together:

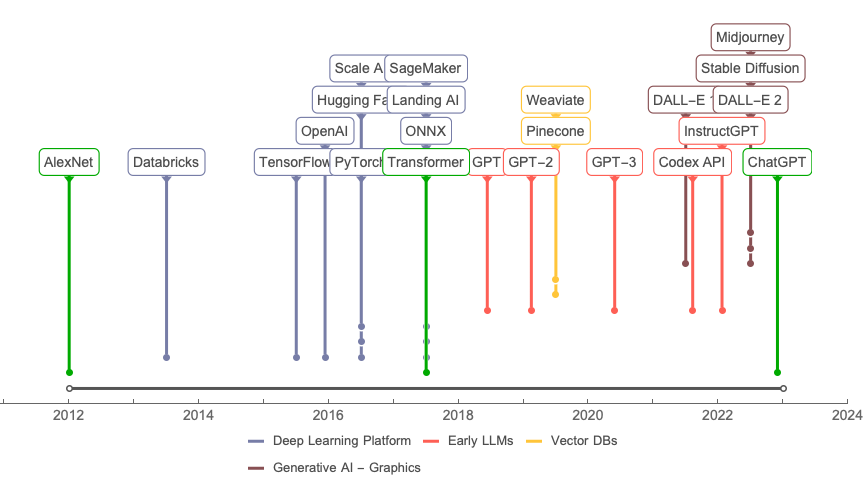

Intelligence Over the Wire: AI Starts to Work (2012-2022)

AlexNet and Deep Learning Platforms

The theory for the type of Artificial Intelligence and Artificial Neural Networks that are popular in 2023 perhaps dates back to McCulloch and Pitts’ 1943 paper “A Logical Calculus of the Ideas Immanent in Nervous Activity” and Turing’s 1950 paper “Computing Machinery and Intelligence”. And the first implementation dates back to Rosenblatt’s 1957 work “The Perceptron: A Perceiving and Recognizing Automaton”.

But artificial neural networks weren’t powerful enough to do anything particularly “useful” until 2012 when AlexNet, an eight-layer convolutional neural net, was able to classify 1.2 million images into 1,000 different classes (e.g. cat, person, chair) with drastically better results than any previous model.

Many efforts built upon the ideas of “deep” convolutional neural networks. The first to surpass the human benchmark of 5.1% “top-5 error” was a model published by Microsoft Research in early 2015, with a “top-5 error” of 4.94%. The same group’s ResNet model pushed that figure down to 3.57% later that year.
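For readers unfamiliar with the metric: “top-5 error” is the fraction of test images for which the correct label is not among a model’s five highest-scoring guesses. Here is a minimal sketch in Python. The helper name `top_k_error` and the toy scores are made up for illustration and are not taken from any of the models mentioned here.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is NOT among the k highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Indices of the k classes with the largest scores for this example.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy data (hypothetical): 4 "images", 6 classes, one score per class.
scores = [
    [0.10, 0.60, 0.05, 0.20, 0.03, 0.02],
    [0.30, 0.05, 0.40, 0.10, 0.10, 0.05],
    [0.05, 0.05, 0.10, 0.50, 0.25, 0.05],
    [0.70, 0.10, 0.05, 0.05, 0.05, 0.05],
]
labels = [1, 0, 2, 5]  # index of the correct class for each image

print(top_k_error(scores, labels, k=1))
print(top_k_error(scores, labels, k=2))
```

On this toy data the top-1 error is 0.75 and the top-2 error is 0.5: allowing more guesses per image can only lower the error, which is why top-5 figures are always at least as good as top-1 figures for the same model.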

Several commercial deep learning platforms were created soon after to allow companies to use data to train neural nets and to commercialize those models in products.

The Transformer Architecture and Generative AI

Then, in 2017, researchers at Google introduced the “transformer” architecture in the paper “Attention is All You Need”. Transformers performed significantly better at “reading” and “writing” long sequences of information, “paying attention” to multiple aspects of data simultaneously, and applying what they’d “learned” from one dataset to another dataset.

In 2018, OpenAI published their results from experimenting with using transformers for natural language understanding. Using transformers, they pre-trained a single model to generate text in response to a prompt and then used creative techniques to interpret the output as something suitable for a wide variety of tasks such as question answering and sentiment analysis. In doing so, they achieved state-of-the-art results in 9 of 12 tested categories.

They followed up this work with a larger model in 2019 dubbed GPT-2 for “Generative Pre-Trained Transformer 2”. GPT-2 was drastically more powerful than its predecessor, thus OpenAI continued development of their “GPT-n” series.

GPT-3, released on June 11, 2020, was quite powerful. It came to power hundreds of products for text-based tasks like identifying themes, generating summaries, and semantic search. GitHub’s Copilot product, launched as a technical preview in June 2021, used Codex, a “descendant” of GPT-3, to help many programmers write code. Still, the “median” computer and internet user was unaware of the GPT models.

But when ChatGPT was released in November 2022, billions of people took notice. Why? Well, ChatGPT was not using a significantly more “powerful” neural network per se. But its model, GPT-3.5, was much better at following a user’s instructions in order to produce useful results.

If you ask a smart person a question and they don’t answer it, that’s not very useful. The same is true for AI models. ChatGPT managed to have enough power and enough alignment with users’ requests to be useful for a broad range of tasks that humans value such as summarizing text and writing simple programs.

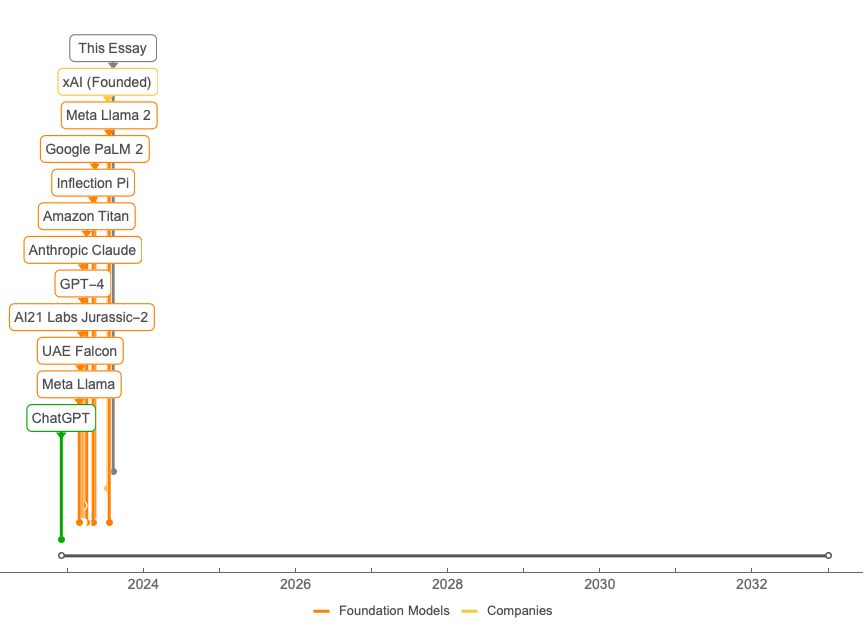

Intelligence Over the Wire: ChatGPT and Beyond (2022-?)

The immediate and immense success of ChatGPT caught many people’s attention - and their imagination. One could imagine a whole world of new software applications built with an LLM at the core. Or, as I’ve become fond of saying, applications that use LLMs as a “kernel”.

Since the release of ChatGPT, many teams have built large foundation models. They vary somewhat in size, architecture, training corpus, etc. Some are open-source, some closed-source. Some are available publicly, some privately, some not at all.

There’s tremendous opportunity to build useful applications on top of these foundation models. And perhaps to also develop much more powerful foundation models. This seems to be the beginning of a new technological era.

So…what will the timeline below look like when we look back from 2033?

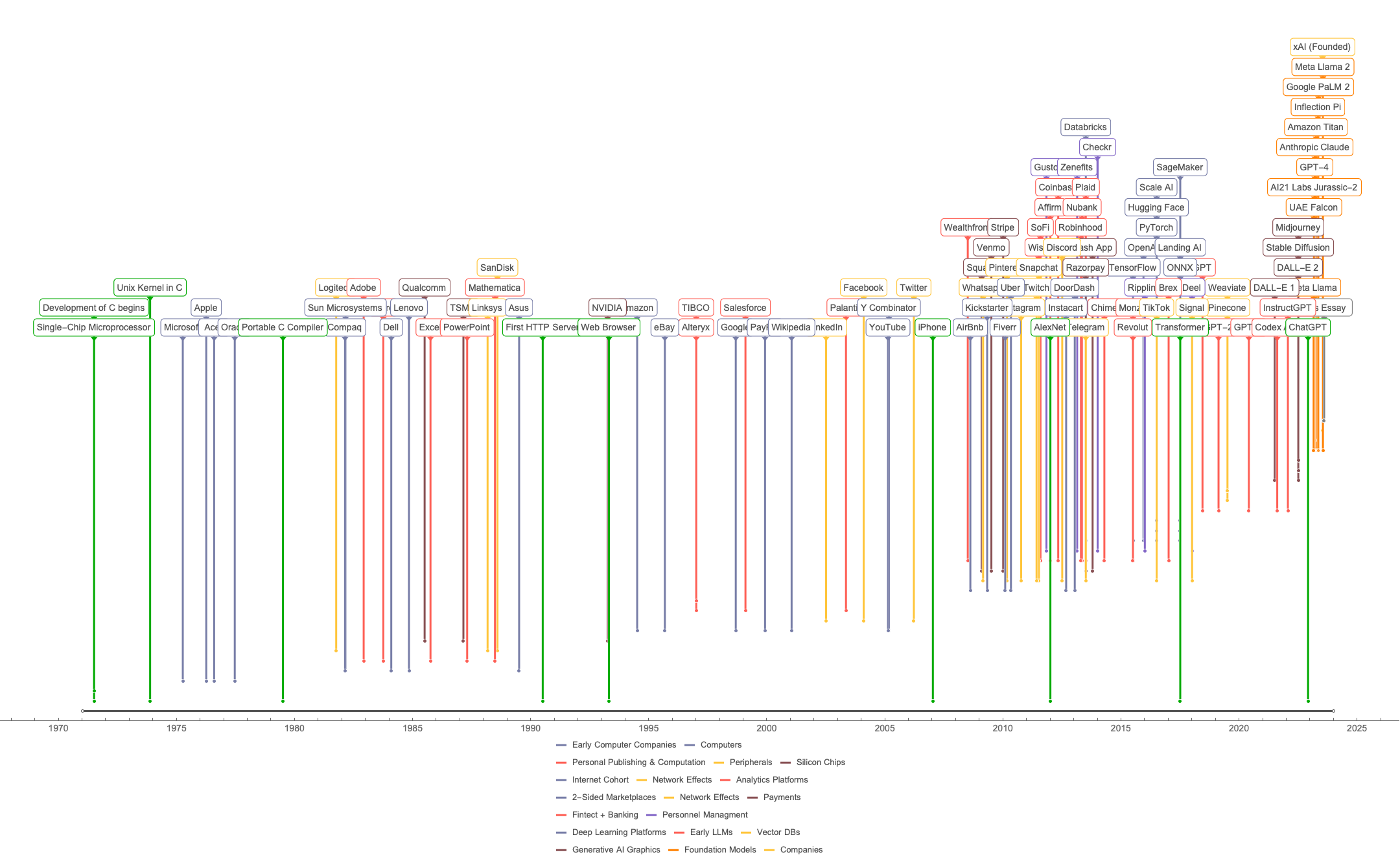

p.s. Microprocessor and C to ChatGPT (1971-2022)

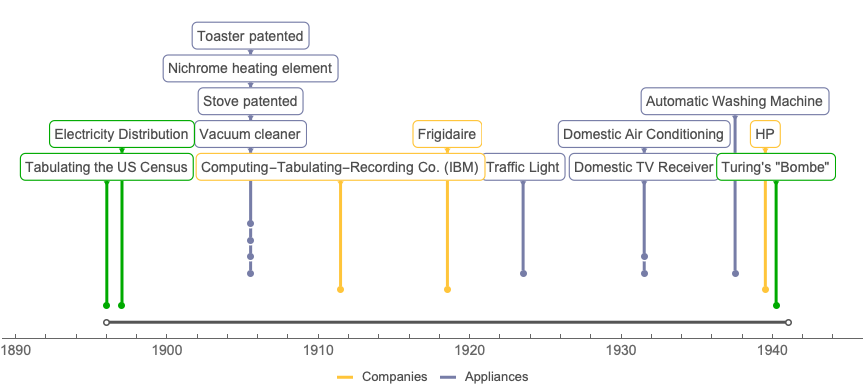

p.p.s. Wait, what happened before the Microprocessor and C?

Electricity and tabulating stuff, electrical appliances, electromechanical computers, transistors, and early computers.

Energy over the Wire: Electricity (1896-1940)

In 1896, Herman Hollerith founded the Tabulating Machine Company, building on the punched-card machines he had developed to tabulate the 1890 US Census. That same year, the hydroelectric plant at Niagara Falls, built around Nikola Tesla’s alternating-current designs, began delivering power to Buffalo, New York.

Things seemed to move slower back then. In 1911, Hollerith’s company was merged with several others to form the “Computing-Tabulating-Recording Company”, now known as IBM.

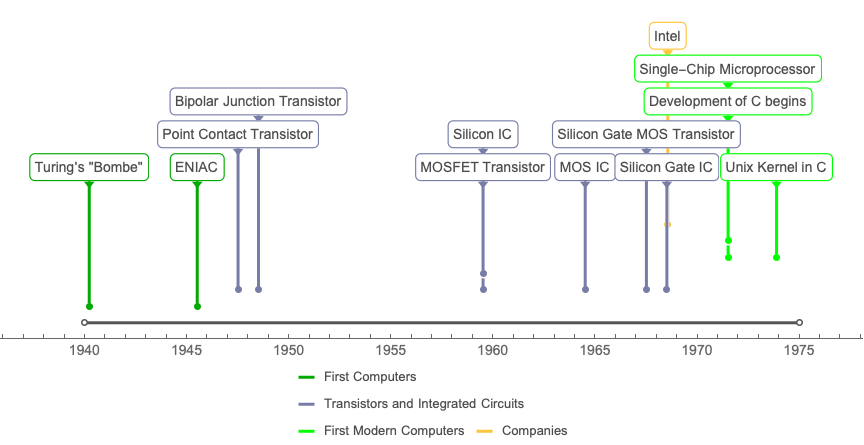

Electromechanical Computers: Turing’s “Bombe” to Unix Kernel in C (1940-1974)

Perhaps the next big thing was Turing’s electromechanical device for doing computation. After that, more computers were made. ENIAC, the first electronic programmable digital computer, was completed in 1945. It was really big and used vacuum tubes and relays. In 1947, the first transistor - a “point contact” transistor - was created. Then came the “bipolar junction” transistor in 1948.

Jump to 1959 and the first modern transistor - the MOSFET - and silicon Integrated Circuit were created. Now we’re getting close to modern computer architecture! Transistors and Integrated Circuits improved until the Single-Chip Microprocessor emerged in 1971. Also in 1971, the development of the C Programming Language began. Then, in 1974, the Unix kernel was rewritten in C and the era of the personal computer began.

Energy, Information, and Intelligence over the Wire (1896-2022)

And here’s the whole 127-year arc:

Thanks

to Kyle and Stephanie Reagan for reviewing drafts of this.